Keep Your Site Fast with Mod_PageSpeed, Now Available for Hostdedi Cloud

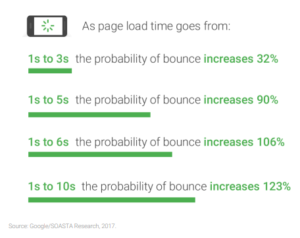

If you’re a developer, or have access to one, Mod_PageSpeed provides a relatively easy path toward addressing speed bumps before they drive away your business, not after.

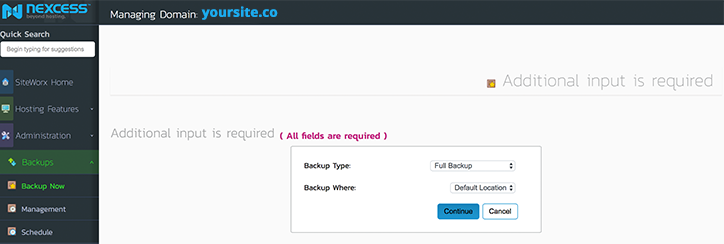

Even better, if you’re a Hostdedi Cloud client, we can help you get Mod_PageSpeed up and running, or your developer can enable it by adding the following to your site’s .htaccess file:

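```apache
# Only apply these directives if the PageSpeed module is loaded
<IfModule pagespeed_module>
    # Turn PageSpeed optimization on
    ModPagespeed on
    # Use the default CoreFilters set, considered safe for most sites
    ModPagespeedRewriteLevel CoreFilters
</IfModule>
```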

Slow websites wish they were as pretty as this gargantuan gastropod.

What is Mod_PageSpeed?

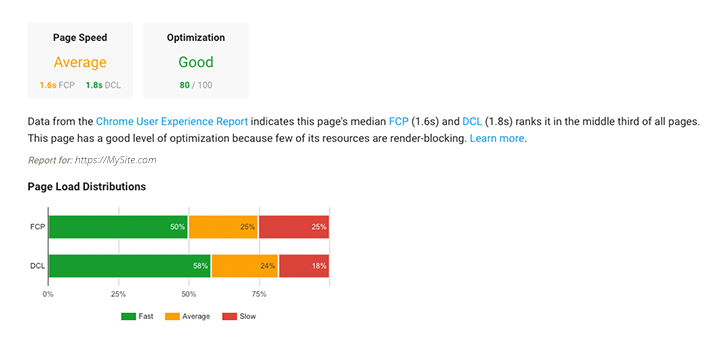

PageSpeed, or Mod_PageSpeed, is an open-source module for the Apache and NGINX web servers. Google developed it as a counterpart to PageSpeed Insights, which suggests ways to optimize your site; Mod_PageSpeed applies many of those same optimizations automatically.

These optimizations span five categories and generally look for ways to reduce file sizes and apply best practices without changing your content:

- Stylesheets (CSS)

- JavaScript (JS)

- Images

- HTML

- Tracking activity filters

Each of these categories is divided into multiple filters, potentially giving you more direct control over the scope of optimization. For a detailed list of these filters, see the Google PageSpeed Wiki.
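As a rough illustration of that finer-grained control, the .htaccess fragment below keeps the default CoreFilters set and adds two filters that CoreFilters leaves off. ModPagespeedEnableFilters is a standard mod_pagespeed directive that takes a comma-separated list of filter names; the two filters chosen here, remove_comments and collapse_whitespace, are only an assumed example, so check the filter documentation before enabling anything on a live site.

```apache
<IfModule pagespeed_module>
    ModPagespeed on
    # Start from the default CoreFilters set
    ModPagespeedRewriteLevel CoreFilters
    # Example only: also strip HTML comments and collapse extra whitespace
    ModPagespeedEnableFilters remove_comments,collapse_whitespace
</IfModule>
```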

Not for Everyone

As you might guess, Mod_PageSpeed isn’t a good option for everyone. If you answer “no” to any of these questions, you may need another approach.


- Does your site use mostly static content? Static content, meaning content that doesn’t change from visitor to visitor, sees far better results from Mod_PageSpeed. Its optimizations have almost no effect on dynamic content, or content that adapts to how your site visitors behave.

- Are you done making short-term changes to your site’s content? Each change you make diminishes the effect of Mod_PageSpeed optimizations. If you’re still making changes, the need to reconfigure Mod_PageSpeed after each one can bury your development team under additional work and complicate the process.

- Is your site free of other website acceleration technology? If not, that technology tends not to play nicely with Mod_PageSpeed, especially when both are optimizing your HTML. While it’s possible to disable HTML optimization in either Mod_PageSpeed or the other tool, any misstep can lead to HTML errors and an unpleasant experience for your visitors.

- Do you have access to a developer? PageSpeed is open source, and deploying and maintaining it properly takes some developer know-how. If you’re not planning upcoming changes to your site, this need is somewhat reduced; just remember that any future changes will likely slow down your site without a developer’s assistance.

- Do you run your own Apache or NGINX server, or host with a company that gives you the tools to install Mod_PageSpeed? If you run your own server, you have root access, though the previous question about developer know-how still applies. We can’t speak for other companies, but if you’re a Hostdedi Cloud client, we’ll install it for you and even assist with basic configuration. Or, if you know a developer, they can enable it themselves by modifying your .htaccess file.
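For the acceleration-technology question above, here is a minimal sketch of the Mod_PageSpeed side of that fix. It assumes, purely for illustration, that your other tool already combines and inlines your CSS and JavaScript; ModPagespeedDisableFilters turns off the named filters while leaving the rest of CoreFilters active. Which filters actually overlap depends on the other tool, so treat this list as hypothetical and test changes before they reach visitors.

```apache
<IfModule pagespeed_module>
    ModPagespeed on
    ModPagespeedRewriteLevel CoreFilters
    # Hypothetical overlap: another accelerator already combines and inlines
    # CSS and JavaScript, so leave those jobs to it
    ModPagespeedDisableFilters combine_css,combine_javascript,inline_css,inline_javascript
</IfModule>
```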

If you’re not a Hostdedi client but think Mod_PageSpeed might be a good fit, we once again recommend enlisting the services of a developer to both avoid potential pitfalls and get the most out of it.

If you are a Hostdedi Cloud client, or are just the curious sort, read on to learn a little about what even the default configuration of Mod_PageSpeed can accomplish.

“CoreFilters” for Mod_PageSpeed

For non-developers and for review, remember that “filter” is just PageSpeed jargon for a subcategory within one of the five optimization categories: CSS, JS, images, HTML, and tracking activity. If a filter is enabled, Mod_PageSpeed optimizes that element.

We use the default “CoreFilters” rewrite level because it is considered safe for most websites. It includes the following filters:

add_head – Adds a <head> tag to the document if not already present

combine_css – Combines multiple CSS elements into one

combine_javascript – Combines multiple script elements into one

convert_meta_tags – Adds a response header for each meta tag with an http-equiv attribute

extend_cache – Extends the cache lifetime of CSS, JavaScript, and image resources that have not otherwise been optimized, by signing their URLs with a content hash

fallback_rewrite_css_urls – Rewrites resources referenced in any CSS file that cannot otherwise be parsed and minified

flatten_css_imports – Sets CSS inline by flattening all @import rules

inline_css – Inlines small CSS files into the HTML document

inline_import_to_link – Inlines <style> tags with only CSS @imports by converting them to equivalent <link> tags

inline_javascript – Inlines small JS files into the HTML document

rewrite_css – Rewrites CSS files to remove excess whitespace and comments and, if enabled, rewrites or cache-extends images referenced in CSS files

rewrite_images – Optimizes images by re-encoding them, removing excess pixels, and inlining small images

rewrite_javascript – Rewrites JavaScript files to remove excess whitespace and comments

rewrite_style_attributes_with_url – Rewrites the CSS in style attributes that contain the text “url(” by applying the configured rewrite_css filter to them
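If you’d rather not switch on every filter in this list at once, one cautious approach is to start from the PassThrough rewrite level, which enables no filters by default, and opt in to a few filters by name. The subset below is only an assumed example of minification and caching filters taken from the CoreFilters list, not a recommendation for any particular site.

```apache
<IfModule pagespeed_module>
    ModPagespeed on
    # PassThrough: no filters are enabled unless listed explicitly
    ModPagespeedRewriteLevel PassThrough
    # Example subset taken from the CoreFilters list
    ModPagespeedEnableFilters rewrite_css,rewrite_javascript,rewrite_images,extend_cache
</IfModule>
```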

If you’re already using Hostdedi Cloud, contact our 24/7 support team with any questions, or to have Mod_PageSpeed installed for you today!

Posted in:

General

Magento Continues to Dominate the eCommerce Market

Magento Continues to Dominate the eCommerce Market PWA Is the Future

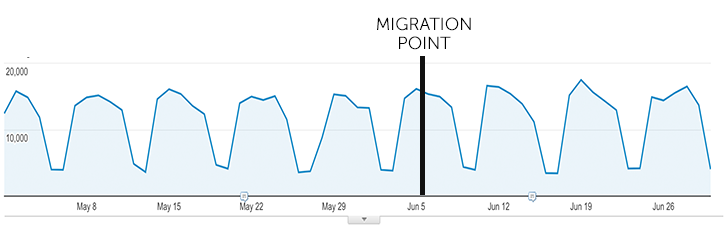

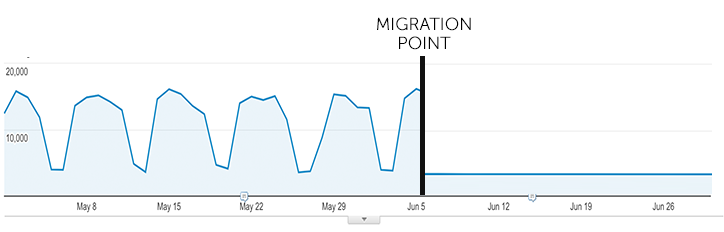

PWA Is the Future Uptime Remains a Primary Concern for Content Producers

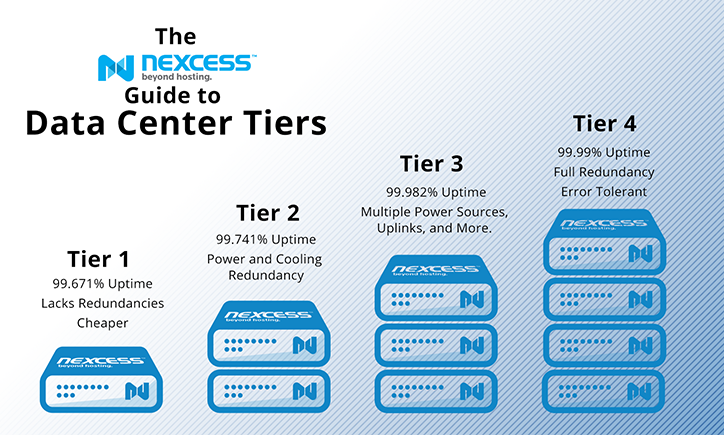

Uptime Remains a Primary Concern for Content Producers